Why

First of all, why does this website exist?

I kept starting projects that never saw the light of day, either because I never fully finished them or because they had no real use case when better alternatives already existed.

So on the one hand, this website is a project I actually finished and put out there for everyone to see. On the other hand, it gives me a platform to showcase the work I'll be doing in the future.

Design

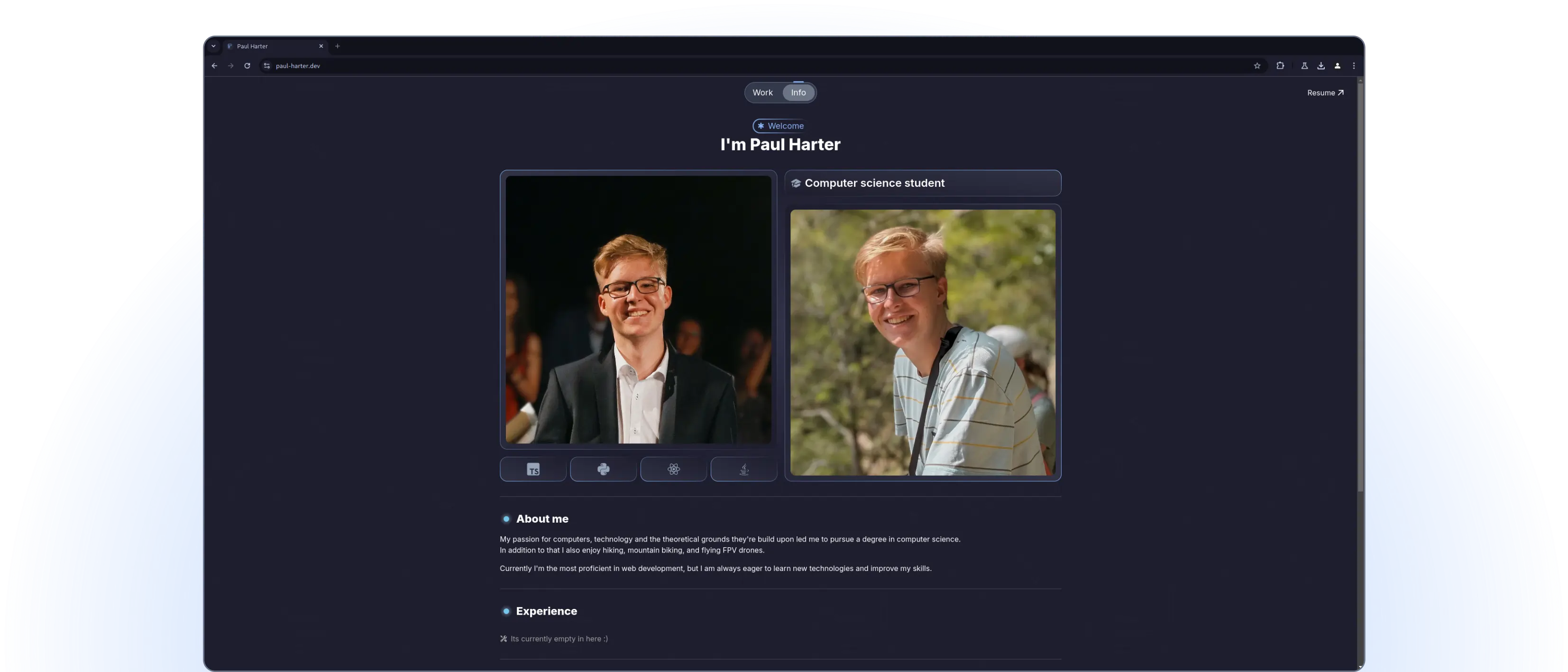

I'm no designer, but I do have an opinion on what looks good to me and what doesn't, so I tried my best to make this website look as good as possible.

Of course, I took inspiration from other sources, and credit where credit is due. Here are the places I took inspiration from:

- Perry Wang's website

- Catppuccin color palette

- Google's Material 3

How does it work

This website is a relatively simple static site, so there isn't that much interesting stuff worth mentioning here, but I think I can give some insight into two "pretty" interesting points.

Markdown based posting system

To make it as easy as possible to create a new project post, the only thing I need to do is create a new Markdown file and upload a new banner picture.

At build time, these Markdown files get converted into native HTML or React components, so there is no overhead for the user. This uses an npm package called next-mdx-remote, which builds on top of MDX.

Maybe a bit overkill, but it works really well, and if I ever want to store my static files elsewhere, it makes that really easy to do.

The creation process of a new post looks something like this (simplified):
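As a rough sketch (not the site's actual code), the step from a Markdown file to post data could be modeled like this in TypeScript. The front-matter convention, the `Post` type, the `parsePost` helper, and the default banner path are all assumptions for illustration. On the real site, the conversion into React components is handled by next-mdx-remote.

```typescript
// Hypothetical shape of a project post; the real site may store different fields.
interface Post {
  slug: string;   // derived from the file name
  title: string;
  banner: string; // path to the banner picture
  body: string;   // Markdown content without the front matter
}

// Parse a Markdown file that starts with a simple "key: value" front-matter block.
// This front-matter format is an assumption, not something taken from the site.
function parsePost(fileName: string, source: string): Post {
  const match = /^---\n([\s\S]*?)\n---\n?([\s\S]*)$/.exec(source);
  const meta: Record<string, string> = {};
  if (match) {
    for (const line of match[1].split("\n")) {
      const idx = line.indexOf(":");
      if (idx > 0) {
        meta[line.slice(0, idx).trim()] = line.slice(idx + 1).trim();
      }
    }
  }
  const slug = fileName.replace(/\.mdx?$/, "");
  return {
    slug,
    title: meta["title"] ?? slug,
    // Fall back to a banner named after the post (hypothetical path layout).
    banner: meta["banner"] ?? `/banners/${slug}.png`,
    body: (match ? match[2] : source).trim(),
  };
}

// Example: one new Markdown file plus one banner picture yields one post.
const examplePost = parsePost(
  "my-website.md",
  "---\ntitle: My Website\nbanner: /banners/my-website.webp\n---\n# Why\n\nFirst of all...",
);
```

Deriving the slug from the file name keeps the "just add a file" workflow: nothing else has to be registered anywhere for the post to show up.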

Search engine optimization

This was the first time I put some effort into SEO. It's a rabbit hole of its own, and I'm pretty sure I didn't reach the bottom of it, but here are the things I did:

Metadata:

If you take a look inside the head of the about page, you will see a lot of meta tags. Here is a short snippet:

...

<meta property="og:title" content="Paul Harter"/>

<meta property="og:description" content="Thats me. [...]"/>

<meta property="og:image" content="https://paul-harter.dev/paul1.webp"/>

...

- There are Open Graph meta tags, which provide "rich" metadata for other sites, especially social platforms, to use. This matters most for embedded links, which can then show a thumbnail and a short description

- There are the standard title, description and favicon tags used by the browser

- And some special tags, for example the tags for Twitter
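To make the difference between these tag families concrete, here is a minimal TypeScript sketch that generates Open Graph and Twitter card tags from one set of page data. The `PageMeta` type and the `socialMetaTags` and `escapeAttr` helpers are hypothetical names, and the description text is a placeholder. The site itself may well produce these tags through its framework instead.

```typescript
// Hypothetical per-page metadata; field names are illustrative.
interface PageMeta {
  title: string;
  description: string;
  image: string; // absolute URL of the preview image
}

// Escape characters that would break out of a double-quoted HTML attribute.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Open Graph tags use the `property` attribute; Twitter card tags use `name`.
function socialMetaTags(page: PageMeta): string[] {
  const og: Array<[string, string]> = [
    ["og:title", page.title],
    ["og:description", page.description],
    ["og:image", page.image],
  ];
  const twitter: Array<[string, string]> = [
    ["twitter:card", "summary_large_image"],
    ["twitter:title", page.title],
    ["twitter:description", page.description],
    ["twitter:image", page.image],
  ];
  return [
    ...og.map(([property, content]) =>
      `<meta property="${property}" content="${escapeAttr(content)}"/>`),
    ...twitter.map(([name, content]) =>
      `<meta name="${name}" content="${escapeAttr(content)}"/>`),
  ];
}

// Example input; the description is placeholder text, not the site's real one.
const aboutTags = socialMetaTags({
  title: "Paul Harter",
  description: "A short description of the page",
  image: "https://paul-harter.dev/paul1.webp",
});
```

Building both families from the same object keeps the title, description, and image consistent wherever a link to the page gets embedded.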

Sitemaps and crawlers:

The website provides a sitemap.xml to make it easier for web crawlers to crawl the site. After the website is built, a script (next-sitemap) runs to generate this sitemap automatically.

It also provides a robots.txt to tell the crawlers which pages they are allowed to crawl.
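To show roughly what these two files contain, here is a hand-rolled TypeScript sketch. It is not how next-sitemap works internally. The `buildSitemap` and `buildRobotsTxt` helpers and the example paths are assumptions made up for illustration.

```typescript
// Escape the five characters that are special in XML text and attributes.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build a minimal sitemap following the sitemaps.org 0.9 schema:
// one <url><loc>...</loc></url> entry per page.
function buildSitemap(siteUrl: string, paths: string[]): string {
  const urls = paths
    .map((path) => `  <url><loc>${escapeXml(siteUrl + path)}</loc></url>`)
    .join("\n");
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    urls,
    "</urlset>",
  ].join("\n");
}

// Build a robots.txt that allows everything except the given paths
// and points crawlers at the sitemap.
function buildRobotsTxt(siteUrl: string, disallowed: string[] = []): string {
  const rules = disallowed.length > 0
    ? disallowed.map((path) => `Disallow: ${path}`)
    : ["Allow: /"];
  return ["User-agent: *", ...rules, `Sitemap: ${siteUrl}/sitemap.xml`].join("\n") + "\n";
}

// Example with hypothetical paths.
const sitemapXml = buildSitemap("https://paul-harter.dev", ["/", "/about"]);
const robotsTxt = buildRobotsTxt("https://paul-harter.dev");
```

Referencing the sitemap from robots.txt lets crawlers discover it without it being submitted to each search engine separately.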

Lighthouse:

Using Google's Lighthouse, I tried to optimize the page as much as possible: I used optimized image formats, made the site more accessible, reduced loading time where possible, and so on.

But the site still isn't perfect, especially on mobile, where the requirements are stricter than on desktop.